Deep Learning Fundamentals: Understanding the Brain-Inspired Revolution in AI

A Complete Breakdown of Neural Networks and Why They Work

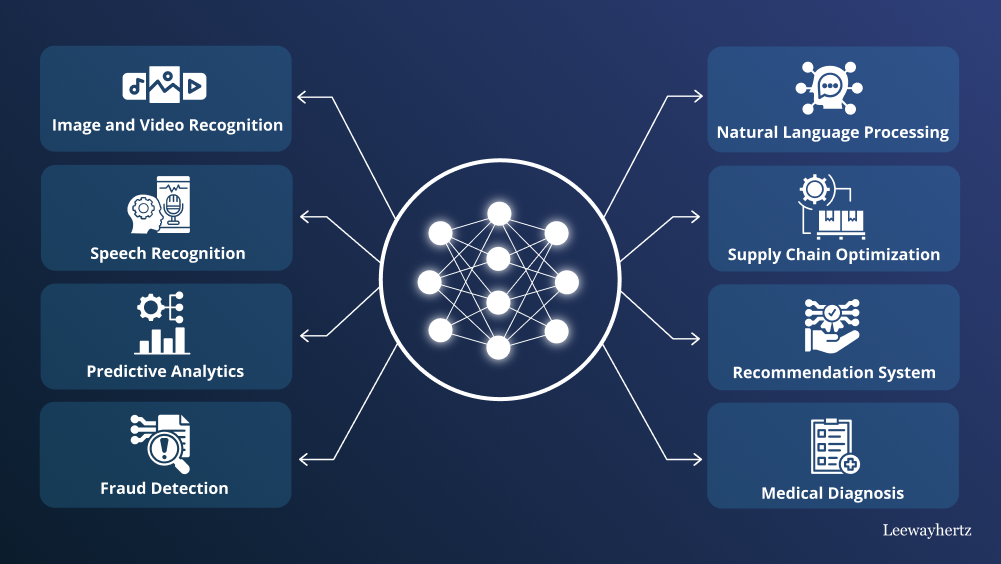

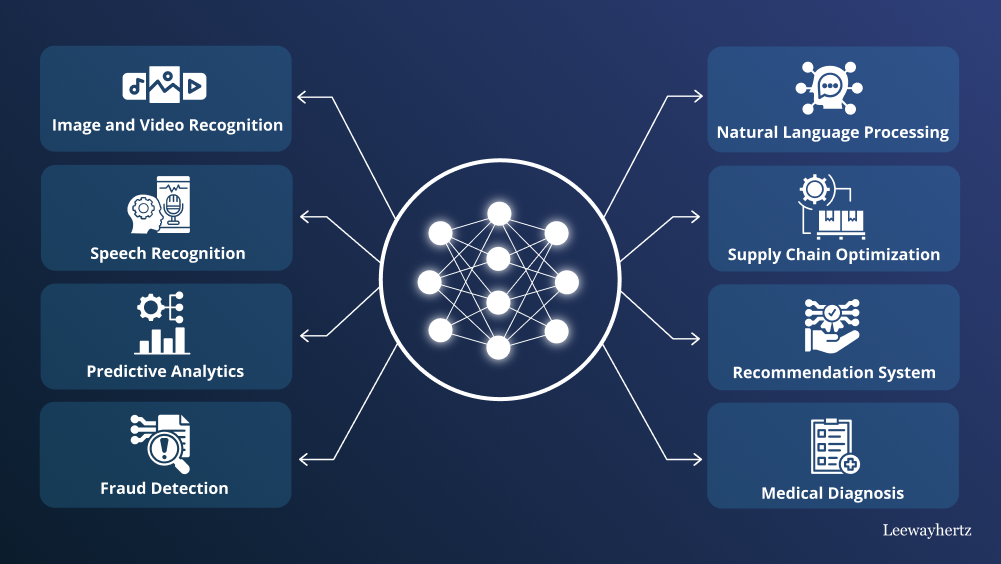

Deep learning is the engine behind computer vision, large language models, robotics, speech recognition, and nearly all modern breakthroughs in AI.

Inspired by the human brain, deep learning systems use layers of artificial neurons to learn hierarchical representations of data.

1. What Makes Deep Learning “Deep”?

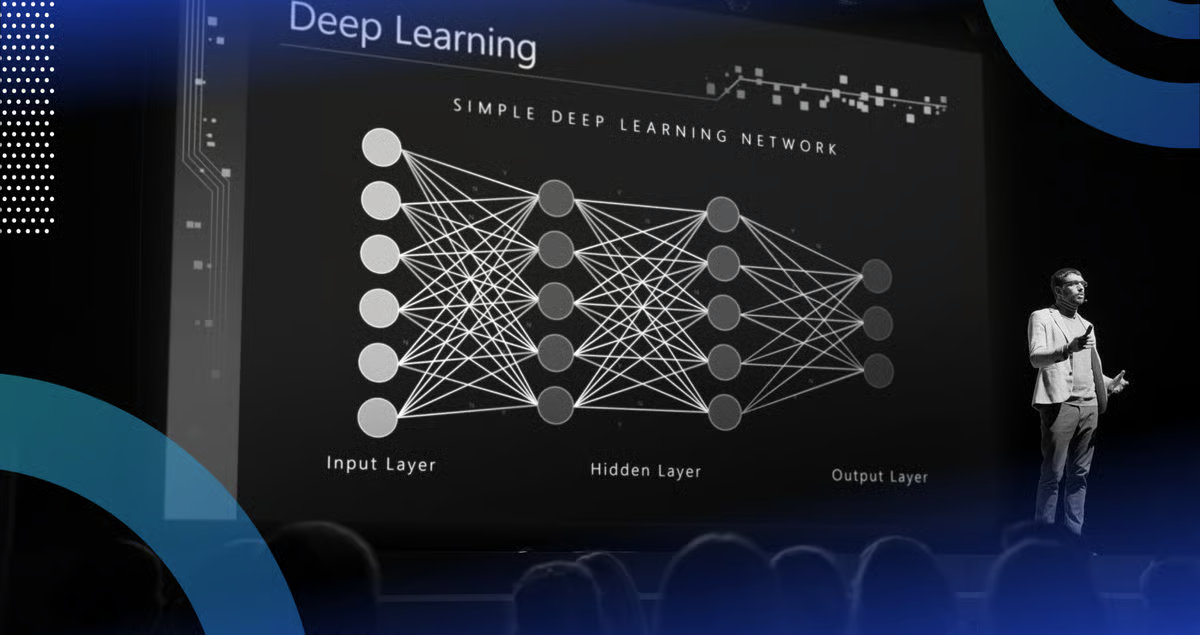

The term “deep” comes from the use of multiple hidden layers. Each layer learns increasingly abstract features.

This hierarchical learning capability is what makes deep learning so powerful.

2. The Structure of a Neural Network

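A neural network consists of layers of artificial neurons joined by weighted connections: an input layer that receives the data, one or more hidden layers that transform it, and an output layer that produces the prediction.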
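To make that structure concrete, here is a minimal sketch of a forward pass through a tiny network with two inputs, three hidden neurons, and one output. The weights, biases, layer sizes, and function names are hand-picked assumptions for illustration, not values from any trained model.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def forward(x, layers):
    """Pass x through each (weights, biases) pair, applying ReLU on every layer except the last."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return x


# Hypothetical parameters: 2 inputs -> 3 hidden neurons -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 1.0]),  # hidden layer
    ([[1.0, 2.0, -1.0]], [0.5]),                                # output layer
]

output = forward([2.0, 1.0], layers)
print(output)
```

Each row of a weight matrix holds the incoming connection strengths for one neuron, so adding a neuron means adding a row, and adding a layer means appending another pair to `layers`.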

3. Activation Functions

Activation functions introduce non-linearity, allowing networks to learn complex patterns.

Without them, a stack of layers would collapse into a single linear transformation, no matter how many layers it contained.

4. Training With Gradient Descent

Training a neural network involves adjusting weights to reduce error. This is done using gradient descent and backpropagation.

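In each iteration, the model produces an output, computes the loss against the expected result, uses backpropagation to find how much each weight contributed to that loss, and then nudges every weight a small step in the direction that reduces it.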
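The loop can be sketched for the simplest possible case: a single linear neuron fitted with mean squared error. In this setting backpropagation reduces to one application of the chain rule. The toy data (generated from y = 2x + 1), the learning rate, and the step count are all assumptions chosen for illustration.

```python
# Toy data generated from y = 2x + 1 (an assumption for illustration).
data = [(x, 2.0 * x + 1.0) for x in [-2.0, -1.0, 0.0, 1.0, 2.0]]


def mse(w, b):
    """Mean squared error of the model w*x + b over the toy data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradients(w, b):
    """Gradients of the MSE with respect to w and b, derived with the chain rule."""
    n = len(data)
    grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
    return grad_w, grad_b


w, b = 0.0, 0.0        # start from an uninformed guess
learning_rate = 0.1    # step size (hypothetical choice)
initial_loss = mse(w, b)

for step in range(200):
    grad_w, grad_b = gradients(w, b)
    # Move each parameter against its gradient to reduce the loss.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

final_loss = mse(w, b)
print(w, b, initial_loss, final_loss)
```

In a multi-layer network the update step looks the same; the difference is that the gradients for earlier layers are obtained by propagating the error backward through the later ones.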

5. Types of Deep Learning Architectures

6. Why Deep Learning Works So Well

Deep learning works exceptionally well in part because, loosely inspired by the way the human brain processes information, it uses layers of artificial neurons to learn complex patterns from large amounts of data. Unlike traditional machine learning methods, which often rely on manually crafted features, deep learning models automatically extract meaningful representations from raw inputs, whether images, text, or audio. This ability to identify hierarchical patterns, such as edges in an image or grammatical structures in a sentence, allows deep networks to handle tasks that were once considered too difficult for machines.

Another reason deep learning excels is its capacity to scale with data and computational power. As datasets grow larger and GPUs become more powerful, deep networks can learn increasingly subtle patterns, improving accuracy and performance. Additionally, techniques like convolutional layers, recurrent structures, and attention mechanisms enable models to focus on the most relevant features, making them highly adaptable across domains. In essence, deep learning combines flexible architectures, massive data, and efficient computation to achieve remarkable results in areas ranging from natural language processing to computer vision, powering many of the AI applications we rely on today.

7. Challenges and Future Directions

Despite its remarkable successes, deep learning still faces several significant challenges. One of the biggest hurdles is explainability: deep networks often operate as “black boxes,” making it difficult to understand why a model arrived at a particular decision. This lack of transparency can be problematic in critical applications such as healthcare, finance, or autonomous systems, where understanding the reasoning behind predictions is essential. Another challenge is generalization. While deep models perform exceptionally well on data that resembles their training set, they can struggle when new inputs differ from what they saw during training, leading to errors or unpredictable behavior. Finally, deep learning models are energy-intensive, requiring massive computational resources for training and deployment, which raises concerns about sustainability and accessibility.

Looking ahead, researchers are exploring innovative solutions to overcome these limitations. Sparse networks, which reduce the number of active connections in a model, promise to maintain performance while drastically lowering energy consumption. Neuromorphic computing, inspired by the human brain, aims to create more efficient and adaptive architectures that process information with minimal power. Additionally, the development of models capable of reasoning and abstract thinking, rather than merely pattern recognition, represents a major frontier in AI research. These advances could make deep learning more transparent, versatile, and energy-efficient, unlocking new possibilities in areas like robotics, scientific discovery, and human-AI collaboration.